Supercharge Your Apps with AI-Powered Performance Optimization

Boost your app's performance and user experience across all platforms with Optiblack's AI-powered optimization solutions. Discover the benefits of...

Monitoring microservices pipelines is essential for system reliability, performance, and user satisfaction in complex distributed systems. Here's why it matters and how to do it effectively.

Quick Tip: Teams using advanced monitoring and automation deploy 208x more often and resolve issues 40% quicker than those relying on traditional methods.

Keep reading to learn actionable strategies, tools, and real-world examples for effective pipeline monitoring.

Tracking the right metrics is essential to understand system health and performance. One of the most critical metrics is latency, which measures how long requests take to complete. For user-facing services, keeping P95 latency (the time within which 95% of requests finish) below 500 ms is a good target.

Another key metric is the error rate, which reflects system reliability. An error rate above 0.1% could indicate underlying problems that need immediate attention.
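To make the two thresholds above concrete, here is a minimal sketch that evaluates one monitoring window against them. It assumes you already have raw latency samples and request/failure counts; the function names are hypothetical, and it uses a simple nearest-rank percentile, whereas monitoring backends usually estimate percentiles from histograms instead.

```python
import math

# Thresholds taken from the text: P95 latency under 500 ms for user-facing
# services, and an error rate that should stay at or below 0.1%.
P95_TARGET_MS = 500.0
ERROR_RATE_LIMIT = 0.001


def percentile(values, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def evaluate_window(latencies_ms, total_requests, failed_requests):
    """Summarize one monitoring window and flag threshold breaches."""
    p95 = percentile(latencies_ms, 95)
    error_rate = failed_requests / total_requests if total_requests else 0.0
    return {
        "p95_ms": p95,
        "error_rate": error_rate,
        "latency_breach": p95 > P95_TARGET_MS,
        "error_breach": error_rate > ERROR_RATE_LIMIT,
    }
```

For example, a window where 10 of 100 sampled requests took 650 ms has a P95 of 650 ms, and 25 failures out of 10,000 requests (0.25%) would trip the error-rate check as well.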

Metrics like request rate and throughput help monitor traffic and capacity limits, while CPU and memory utilization provide insight into resource consumption. Service availability tracks uptime across distributed systems, and dependency and network metrics are vital in spotting issues in interconnected services. These can highlight connectivity problems that might ripple through the system.

Connecting these technical metrics to business outcomes adds even more value. For example, understanding how higher latency impacts conversion rates or how error rates affect customer satisfaction can turn raw data into actionable insights for business decisions.

These metrics form the foundation for implementing observability through its three core components.

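Observability in modern microservices relies on three main elements, each serving a distinct purpose: metrics (numeric measurements such as latency and error rate, aggregated over time), traces (the path and timing of a single request across services), and logs (detailed, timestamped records of individual events).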

When integrated into a unified analytics dashboard, these pillars can reveal relationships between different data types: metrics offer a high-level view, logs provide detailed context, and traces pinpoint the exact duration of specific actions. According to IBM, observability platforms that combine these elements can cut developer troubleshooting time by up to 90%. Aligning this approach with Service Level Objectives (SLOs) keeps the focus on what customers actually experience.
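One common way to tie monitoring to SLOs is an error budget: the amount of "bad" time an SLO permits over a window. The sketch below assumes a time-based availability SLO; the 99.9% target and 30-day window in the example are illustrative, not recommendations from the text.

```python
def error_budget(slo_target, window_days, observed_bad_minutes=0.0):
    """Return (allowed_bad_minutes, remaining_minutes, fraction_consumed)
    for a time-based availability SLO such as 0.999 over 30 days."""
    total_minutes = window_days * 24 * 60
    allowed = (1.0 - slo_target) * total_minutes  # minutes of downtime the SLO permits
    remaining = allowed - observed_bad_minutes
    consumed = observed_bad_minutes / allowed if allowed else float("inf")
    return allowed, remaining, consumed
```

A 99.9% target over 30 days allows about 43.2 minutes of downtime; after 10.8 bad minutes, roughly a quarter of the budget is gone, which is a useful signal for slowing releases before the SLO is actually breached.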

To further enhance observability, centralized logging and distributed tracing provide deeper insights into system behavior.

Centralized logging and distributed tracing are powerful tools that complement the core observability pillars by offering a unified view of microservices interactions. Centralized logging simplifies the complexity of distributed systems by consolidating all log data into one place, making it easier to analyze and correlate across services.

Correlation IDs, generated at the system's entry point (such as an API gateway) and passed along with every downstream call, link logs across multiple services and give a clearer picture of how different components interact.
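A minimal sketch of that pattern, assuming the IDs travel in an HTTP header: the entry point reuses an inbound ID or mints a new one, and every outgoing call copies it forward. The header name `X-Correlation-ID` and the helper functions are hypothetical; use whatever convention your gateway already emits.

```python
import uuid
from contextvars import ContextVar

# Hypothetical header name; X-Correlation-ID and X-Request-ID are common
# conventions, but match whatever your gateway is configured to send.
CORRELATION_HEADER = "X-Correlation-ID"

# ContextVar keeps the ID scoped to the current request (thread or asyncio task).
_correlation_id = ContextVar("correlation_id", default="")


def ensure_correlation_id(incoming_headers):
    """At the entry point: reuse an inbound ID or generate a new one."""
    cid = incoming_headers.get(CORRELATION_HEADER) or str(uuid.uuid4())
    _correlation_id.set(cid)
    return cid


def outgoing_headers(extra=None):
    """Build headers for a downstream call that carry the current correlation ID."""
    headers = dict(extra or {})
    headers[CORRELATION_HEADER] = _correlation_id.get()
    return headers
```

Because each service both reads and forwards the same header, every log line written while handling the request can include the ID, which is what makes cross-service correlation possible.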

Distributed tracing adds another layer by creating an audit trail that explains why issues occur. It works like a performance profiler for distributed systems, helping teams pinpoint bottlenecks and inefficiencies.
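To illustrate what a trace actually records, here is a toy in-process tracer: each span stores a shared trace ID, its own span ID, its parent's ID, and a duration, which is enough to find the slowest step of a request. This is a hypothetical teaching sketch, not how any particular tool works; in practice you would use an instrumentation library such as OpenTelemetry and export spans to a tracing backend.

```python
import time
import uuid
from contextlib import contextmanager
from contextvars import ContextVar

# Toy tracer for illustration only: spans are kept in memory instead of exported.
FINISHED_SPANS = []
_current_span = ContextVar("current_span", default=None)


@contextmanager
def span(name, trace_id=None):
    parent = _current_span.get()
    record = {
        "name": name,
        # Child spans inherit the trace ID; a root span starts a new trace.
        "trace_id": trace_id or (parent["trace_id"] if parent else uuid.uuid4().hex),
        "span_id": uuid.uuid4().hex[:16],
        "parent_id": parent["span_id"] if parent else None,
    }
    token = _current_span.set(record)
    start = time.perf_counter()
    try:
        yield record
    finally:
        record["duration_ms"] = (time.perf_counter() - start) * 1000
        _current_span.reset(token)
        FINISHED_SPANS.append(record)


def slowest_child(trace_id):
    """Return the longest non-root span in a trace: a likely bottleneck."""
    children = [s for s in FINISHED_SPANS if s["trace_id"] == trace_id and s["parent_id"]]
    return max(children, key=lambda s: s["duration_ms"], default=None)
```

Wrapping a checkout request in a root span with child spans for inventory and payment calls would let `slowest_child` point straight at whichever dependency consumed the most time.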

For example, imagine an e-commerce platform experiencing order failures during a flash sale. Logs might show timeouts at the payment gateway, metrics could highlight increased latency in payment processing, and traces might identify a bottleneck in the database connection pool. Together, these insights reveal that the payment service's connection pool is too small to handle the surge in traffic, prompting the team to scale resources dynamically.

A survey of 1,303 CIOs found that 90% of organizations are accelerating digital transformation efforts, with 26% expecting this pace to continue. This rapid change underscores the importance of robust observability to maintain system reliability.

Finally, structured logging makes log data easier to parse and query. By setting appropriate log levels to prioritize critical events, teams can focus their efforts on resolving the most pressing issues first.
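A minimal sketch of structured logging with Python's standard `logging` module, assuming one JSON object per line and a correlation ID passed through `extra`. The field names (`ts`, `level`, `service`, `correlation_id`, `message`) and the helper `make_logger` are our own choices, not a standard schema.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def __init__(self, service):
        super().__init__()
        self.service = service

    def format(self, record):
        payload = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "service": self.service,
            # Present only when the caller passes extra={"correlation_id": ...}.
            "correlation_id": getattr(record, "correlation_id", None),
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def make_logger(service, level=logging.INFO, stream=sys.stdout):
    logger = logging.getLogger(service)
    logger.setLevel(level)  # records below this level are dropped
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(service))
    logger.handlers = [handler]
    logger.propagate = False  # avoid duplicate output via the root logger
    return logger
```

Setting the level to `WARNING` on a noisy service, for example, keeps routine `INFO` messages out of the central store while errors still arrive as queryable JSON.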

Having a unified strategy for metrics and logging can make troubleshooting and maintaining systems much easier. When monitoring data is scattered across different tools, engineers often waste valuable time piecing together what’s going wrong. A centralized approach eliminates this hassle.

This strategy leans on the well-known observability pillars: metrics, traces, and logs. To make logs consistent and machine-readable, it’s a good idea to standardize them in JSON format and use correlation IDs through HTTP headers or message queues. This ensures data from different microservices can be easily connected and displayed on unified dashboards, which most monitoring tools can parse efficiently.

By centralizing logs into one system, teams create a single source of truth for the entire architecture. This consolidated view allows for event correlation across services, advanced analytics, and better security measures. For example, Probo slashed its monitoring costs by 90% after adopting a unified monitoring approach using Last9.

Automated health checks are a natural extension of unified logging, offering real-time validation of system performance. In today’s microservices setups, manual monitoring just can’t keep up with the growing complexity. These automated checks work hand-in-hand with centralized logging and tracing to quickly flag anomalies. Key metrics like uptime, response times, and API functionality are particularly important to monitor.

The frequency of these checks should match the criticality of each service. For highly essential components, frequent checks help catch issues early, while less critical services may require less frequent monitoring.
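One way to express "check frequency follows criticality" is a per-tier interval table plus a function that decides which services are due. The tier names, intervals, and helpers below are hypothetical; a probe is any zero-argument callable that returns `True` when the service is healthy.

```python
import time

# Hypothetical tiers and intervals (seconds); tune them to your own services.
CHECK_INTERVAL_S = {"critical": 10, "standard": 60, "low": 300}


def run_check(name, probe, slow_after_s=2.0):
    """Call a probe and time it. This does not enforce a timeout; it only
    marks a response slower than slow_after_s as unhealthy."""
    start = time.perf_counter()
    try:
        healthy = bool(probe())
    except Exception:
        healthy = False
    latency_ms = (time.perf_counter() - start) * 1000
    return {"service": name,
            "healthy": healthy and latency_ms <= slow_after_s * 1000,
            "latency_ms": latency_ms}


def due_checks(services, last_run, now):
    """Return services whose tier interval has elapsed since their last check."""
    return [name for name, tier in services.items()
            if now - last_run.get(name, float("-inf")) >= CHECK_INTERVAL_S[tier]]
```

With this table, a critical payments service is checked every 10 seconds while a low-priority reporting service is checked every five minutes, and a service that has never been checked is always due.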

Modern CI/CD tools can integrate health checks directly into deployment pipelines, catching potential issues before they make it to production. Dependency testing is another layer of protection, verifying how services interact and reducing the risk of cascading failures.
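As a sketch of a post-deploy gate, the function below polls a health endpoint and only passes after several consecutive `200` responses, so a single lucky response does not promote a flapping service. The endpoint, attempt counts, and function names are assumptions; a pipeline step would typically exit non-zero when it returns `False` so the deployment halts.

```python
import time
import urllib.request


def http_status(url, timeout_s=2.0):
    """Return the HTTP status of a GET request (non-2xx responses raise HTTPError)."""
    with urllib.request.urlopen(url, timeout=timeout_s) as resp:
        return resp.status


def wait_until_healthy(url, attempts=10, delay_s=3.0, required_successes=3,
                       fetch=http_status):
    """Poll a health endpoint; pass only after N consecutive 200 responses."""
    streak = 0
    for attempt in range(attempts):
        try:
            ok = fetch(url) == 200
        except OSError:  # URLError, HTTPError, and socket timeouts all subclass OSError
            ok = False
        streak = streak + 1 if ok else 0
        if streak >= required_successes:
            return True
        if attempt < attempts - 1:  # no pointless sleep after the final attempt
            time.sleep(delay_s)
    return False
```

The injectable `fetch` parameter is a design choice that keeps the gate testable without a live service; in a real pipeline it defaults to a plain HTTP GET against something like a hypothetical `/healthz` endpoint.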

Here’s a real-world example: an e-commerce platform with microservices for user authentication, product search, inventory management, and payment processing experienced a sudden spike in error rates within its payment service. Automated anomaly detection kicked in, triggering deep tracing and collecting detailed logs. The issue? A slow database response, not a failed external API, as initially suspected. Grouping alerts by business impact can also help prioritize responses, ensuring that revenue-critical or user-facing issues are addressed first.
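Grouping alerts by business impact can be as simple as bucketing alerts per service and sorting the buckets by an impact tier, then by worst severity. The tier mapping below is hypothetical; revenue-critical services such as payment and checkout get the most urgent tier, and unknown services fall to the bottom.

```python
from collections import defaultdict

# Hypothetical impact tiers (lower = more urgent) and severity ranking.
IMPACT_TIER = {"payment": 0, "auth": 0, "checkout": 0, "search": 1, "inventory": 1}
SEVERITY_RANK = {"critical": 0, "warning": 1, "info": 2}


def triage(alerts):
    """Group alerts per service, then order groups by impact tier,
    worst severity, and alert count (larger groups first)."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[alert["service"]].append(alert)

    def sort_key(item):
        service, items = item
        worst = min(SEVERITY_RANK[a["severity"]] for a in items)
        return (IMPACT_TIER.get(service, 2), worst, -len(items))

    return sorted(groups.items(), key=sort_key)
```

In this scheme a warning on the payment service still outranks a critical alert on search, which reflects the "revenue first" ordering described above; adjust the tiers if your priorities differ.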

Monitoring doesn’t stop at alerts - it’s an ongoing process that evolves with your architecture. Continuous monitoring involves regularly reviewing metrics to fine-tune observability. By analyzing both current and historical performance data, teams can spot usage trends, growth patterns, and subtle performance dips that might otherwise go unnoticed.

Forrester research highlights the payoff: every dollar spent on user experience yields an eye-popping 9,900% return. Practices such as infrastructure as code (IaC) and configuration management make it easier to adjust monitoring dynamically as conditions change. A service mesh like Istio can also enhance observability, adapting seamlessly when services are added, updated, or removed.

The benefits of strong monitoring are hard to ignore. High-performing teams deploy 208 times more often and achieve 106 times faster lead times than low performers. At the same time, 87% of production container images still contain at least one known vulnerability, so monitoring has to cover security as well as performance.

Focusing on critical user journeys is another key aspect. Take an e-commerce app, for instance. Monitoring the checkout service is crucial, as it directly impacts revenue. Machine learning models can track throughput and error rates, detecting performance drops and automatically increasing logging detail to speed up diagnosis. Regular audits and feedback loops ensure monitoring strategies stay aligned with new features, evolving services, and shifts in user behavior.
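The text mentions machine learning models; as a much simpler statistical stand-in (not ML), the sketch below flags an error rate that sits more than three standard deviations from its recent history and temporarily switches a logger to `DEBUG` to speed up diagnosis. The threshold and function names are assumptions for illustration.

```python
import logging
import statistics


def is_anomalous(history, current, z_threshold=3.0):
    """Flag `current` if it lies more than z_threshold standard deviations
    from the mean of `history` (a z-score test, not a learned model)."""
    if len(history) < 2:
        return False  # not enough data for a baseline
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return current != mean
    return abs(current - mean) / stdev > z_threshold


def adjust_log_level(logger, history, current_error_rate):
    """Use DEBUG logging while the error rate looks anomalous, INFO otherwise."""
    anomalous = is_anomalous(history, current_error_rate)
    logger.setLevel(logging.DEBUG if anomalous else logging.INFO)
    return anomalous
```

For a checkout service whose error rate normally hovers around 0.1%, a jump to 2% would flip its logger to `DEBUG`, and the next normal reading would flip it back.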

The world of microservices monitoring has seen rapid advancements, with several standout tools leading the charge. Prometheus, for instance, is a go-to for over 70% of companies to track metrics, while Grafana is the choice of nearly 40% for creating insightful visualizations. Together, they form a dynamic pair for monitoring microservices effectively.

When it comes to distributed tracing, Jaeger is trusted by 28% of enterprises. It excels at following requests as they move through multiple services, making it easier to pinpoint performance bottlenecks. For log management, the ELK Stack (Elasticsearch, Logstash, and Kibana) remains a favorite, with 63% of organizations relying on it. Many users report that it cuts debugging time by as much as 70%.

Enterprise-level solutions like Dynatrace have also gained popularity, with 69% of Fortune 500 companies adopting it. Meanwhile, platforms like New Relic and Datadog combine metrics, traces, and logs into a single interface, making them appealing for teams that want simplicity and a fast setup.

A major shift in the field is the rise of OpenTelemetry, which is making it easier to switch between tools thanks to its vendor-neutral instrumentation.

Picking the right tools for monitoring microservices isn’t a one-size-fits-all process. Start by focusing on tools that integrate smoothly with your existing systems. Real-time data collection is a priority for over 60% of engineering teams, so ensure your tools can handle live monitoring without adding unnecessary delays.

Scalability is another critical factor. As your architecture grows, you’ll need tools that can manage increasing data loads, more services, and higher user traffic. Pricing models vary: some tools charge per host or service, while others use event-based pricing, which can be more budget-friendly in some high-volume environments depending on how much telemetry each host emits.
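A quick way to compare the two pricing models is to compute the monthly event volume at which they cost the same. Every price below is a hypothetical placeholder, not any vendor's list price; plug in real quotes before drawing conclusions.

```python
# All prices are hypothetical placeholders, not real vendor pricing.
def per_host_cost(hosts, price_per_host):
    """Monthly cost when billed per monitored host."""
    return hosts * price_per_host


def event_based_cost(events_per_month, price_per_million):
    """Monthly cost when billed per million ingested events."""
    return events_per_month / 1_000_000 * price_per_million


def break_even_events(hosts, price_per_host, price_per_million):
    """Monthly event volume at which both pricing models cost the same."""
    return per_host_cost(hosts, price_per_host) / price_per_million * 1_000_000
```

With 20 hosts at a hypothetical $15 each and events at $2 per million, both models cost $300 per month at 150 million events; below that volume event-based pricing is cheaper, above it per-host pricing wins.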

Ease of setup and ongoing maintenance is also worth considering. Open-source options like Prometheus and Grafana offer extensive customization but require technical expertise and regular upkeep. On the other hand, cloud-native platforms like Datadog are quicker to set up but may offer less flexibility.

The learning curve can impact productivity. Tools like Datadog and Last9 are designed to get teams up to speed quickly, while solutions like Prometheus and Grafana demand more technical knowledge but provide greater customization options.

Performance tracking is another must-have. Look for tools that monitor CPU usage, memory, response times, and error rates in real time. The ability to connect metrics, logs, and traces can significantly speed up debugging and uncover root causes.

Before committing to a tool, conduct trial runs to see how well it fits your team’s needs. The table below highlights some key features of popular monitoring tools.

| Tool | Strengths | Learning Curve | Pricing Model | Best For |
|---|---|---|---|---|
| Last9 | Comprehensive observability with intelligent alerts | Low | Event-based | Complex distributed systems, high-cardinality data |
| Prometheus + Grafana | Highly customizable | High | Open source (infra costs) | Teams with technical expertise |
| Datadog | User-friendly with broad integrations | Low | Per host/service | Teams needing quick setup |
| Lightstep | Deep analysis and change intelligence | Medium | Per service/seat | Teams managing frequent changes |
| Dynatrace | AI-driven automation | Medium | Per host/application | Large enterprises |
| Elastic Observability | Advanced search and analysis | Medium-High | Resource-based | Organizations using Elasticsearch |
| Honeycomb | Great for high-cardinality data | Medium | Event-based | Developer-centric teams |

The choice ultimately depends on your team’s specific requirements, technical skills, and budget. Tools with machine learning capabilities are becoming increasingly essential for detecting anomalies and providing predictive insights.

Additionally, FinOps integration is gaining attention as teams aim to balance performance with cost efficiency. Tools that provide visibility into both operational metrics and costs can help optimize spending and performance.

For teams dealing with constant changes, Lightstep offers advanced change intelligence, while developer-focused organizations might find Honeycomb useful for its ability to handle high-cardinality data. Large enterprises often turn to Dynatrace for its AI-powered automation.

The monitoring landscape is constantly evolving, with trends like shift-left observability bringing monitoring into earlier stages of development. Choose tools that not only meet your current needs but are also ready to adapt to the future of microservices monitoring.

Companies that excel at CI/CD deploy changes 208 times more frequently than their peers, and teams using automated workflows see lead times from code commit to deployment that are 106 times faster. These numbers highlight the transformative power of automation in modern development.

Automated pipelines are essential for maintaining quality and reliability. They run tests at several levels (unit, contract, integration, end-to-end, and performance), so both individual services and the overall system are validated. By adopting a shift-left testing strategy, teams can catch and resolve issues earlier in the development cycle, reducing code complexity in 85% of cases.

Beyond speeding up deployment, automation improves monitoring precision across multiple services, increasing deployment frequency by 70%. This is especially valuable in managing large-scale systems with dozens or even hundreds of services.

Security is another area where automation shines. Integrating automated security scans directly into CI/CD pipelines helps identify vulnerabilities before they reach production. Considering that 87% of production container images contain at least one known vulnerability, this proactive approach is crucial in preventing security mishaps.

Automation also minimizes downtime through features like automated rollbacks. When monitoring systems detect anomalies or failures, they can trigger rollbacks instantly, ensuring service availability without requiring manual intervention.

"Our move to CI/CD was revolutionary, streamlining processes and radically enhancing our responsiveness to customer needs." - Robert Martin, Banking Professional

Integrating automated monitoring tools into CI/CD workflows creates a seamless system where issues are addressed before they can affect end-users. This integration supports continuous testing by automating regression and performance tests within the pipeline, ensuring every code change is validated thoroughly.

The synergy between monitoring and deployment processes is key. Observability data directly informs deployment decisions, enabling smarter, faster actions. For instance, progressive delivery strategies like Canary and Blue/Green deployments depend on real-time monitoring metrics to decide whether to promote or roll back updates. These strategies ensure updates are safe and effective before they reach all users.
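A canary decision of this kind can be sketched as a comparison between the canary's metrics and the stable baseline. The thresholds (1.5x the baseline error rate with a 0.1% floor, at most 50 ms of added P95 latency, at least 500 canary requests) are hypothetical, and this is not the analysis any specific tool such as Spinnaker performs.

```python
def canary_verdict(baseline, canary, max_error_ratio=1.5,
                   max_p95_increase_ms=50.0, min_requests=500):
    """Return 'promote', 'rollback', or 'wait' by comparing canary metrics
    (requests, errors, p95_ms) against the stable baseline."""
    if canary["requests"] < min_requests:
        return "wait"  # not enough traffic to judge yet
    base_err = baseline["errors"] / baseline["requests"]
    can_err = canary["errors"] / canary["requests"]
    # An absolute floor keeps a near-zero baseline from blocking every release.
    error_ok = can_err <= max(base_err * max_error_ratio, 0.001)
    latency_ok = canary["p95_ms"] - baseline["p95_ms"] <= max_p95_increase_ms
    return "promote" if error_ok and latency_ok else "rollback"
```

Returning "rollback" here is the point where an automated pipeline would shift traffic back to the stable version without waiting for a human, which is the automated-rollback behavior described earlier.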

Netflix provides a standout example of this integration. By combining Jenkins and Spinnaker with monitoring tools like Prometheus and Grafana, the company reduced deployment time by 70% and increased deployment frequency by 50%.

Other teams using advanced platforms like Devtron report impressive results, such as a 40% reduction in mean time to resolution (MTTR), a threefold increase in deployment frequency, and the ability to onboard new services in under two days. These outcomes stem from treating monitoring as a core part of the development process rather than an afterthought.

Tailored dashboards that deliver specific insights for different teams further enhance pipeline monitoring. This level of integration sets the stage for leveraging containerization and orchestration to take monitoring to the next level.

Containerization and orchestration platforms, such as Kubernetes, have transformed automated monitoring for microservices. These tools provide consistency and reliability, enabling organizations to speed up deployment rates by 75%.

Kubernetes simplifies monitoring by standardizing environments and automating tasks like scaling and networking. For example, its Horizontal Pod Autoscaler (HPA) adjusts the number of instances based on real-time metrics like CPU usage or request rates. Similarly, the Vertical Pod Autoscaler (VPA) optimizes CPU and memory allocation for individual pods.
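The Kubernetes documentation describes the core HPA calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made while that ratio stays within a tolerance (0.1 by default). The sketch below reproduces only that formula plus min/max clamping; the real controller also applies stabilization windows, pod-readiness handling, and multi-metric logic. The function name and example numbers are illustrative.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Core HPA scaling rule: scale replicas in proportion to metric/target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 pods averaging 90% CPU against a 60% target scale to 6, while 4 pods at 63% stay put because the ratio (1.05) falls inside the tolerance.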

In high-traffic scenarios, Kubernetes can deploy multiple instances of monitoring applications across nodes, ensuring smooth performance. It also simplifies lifecycle management for monitoring components, from provisioning to deployment and eventual deletion.

Harri’s experience highlights these benefits. After adopting a containerized microservices architecture with Amazon EKS, the company achieved 90% faster cluster additions, reducing the time to just 20 seconds. Even during peak hours, Harri saw an 80% improvement in performance without downtime, all while cutting computing costs by 30%.

Kubernetes also ensures reliability with redundancy. If a monitoring service fails, the system automatically restarts it, minimizing disruptions and maintaining observability.

"Kubernetes achieves high reliability by enabling the declaration of redundant components. If a service fails, it automatically restarts, ensuring minimal disruption." - Kenn Hussey, VP of Engineering at Ambassador

"The primary reason Kubernetes automation is important is to mitigate human error and operational overhead." - Ju Lim, Senior Manager of Hybrid Platforms Product Management at Red Hat

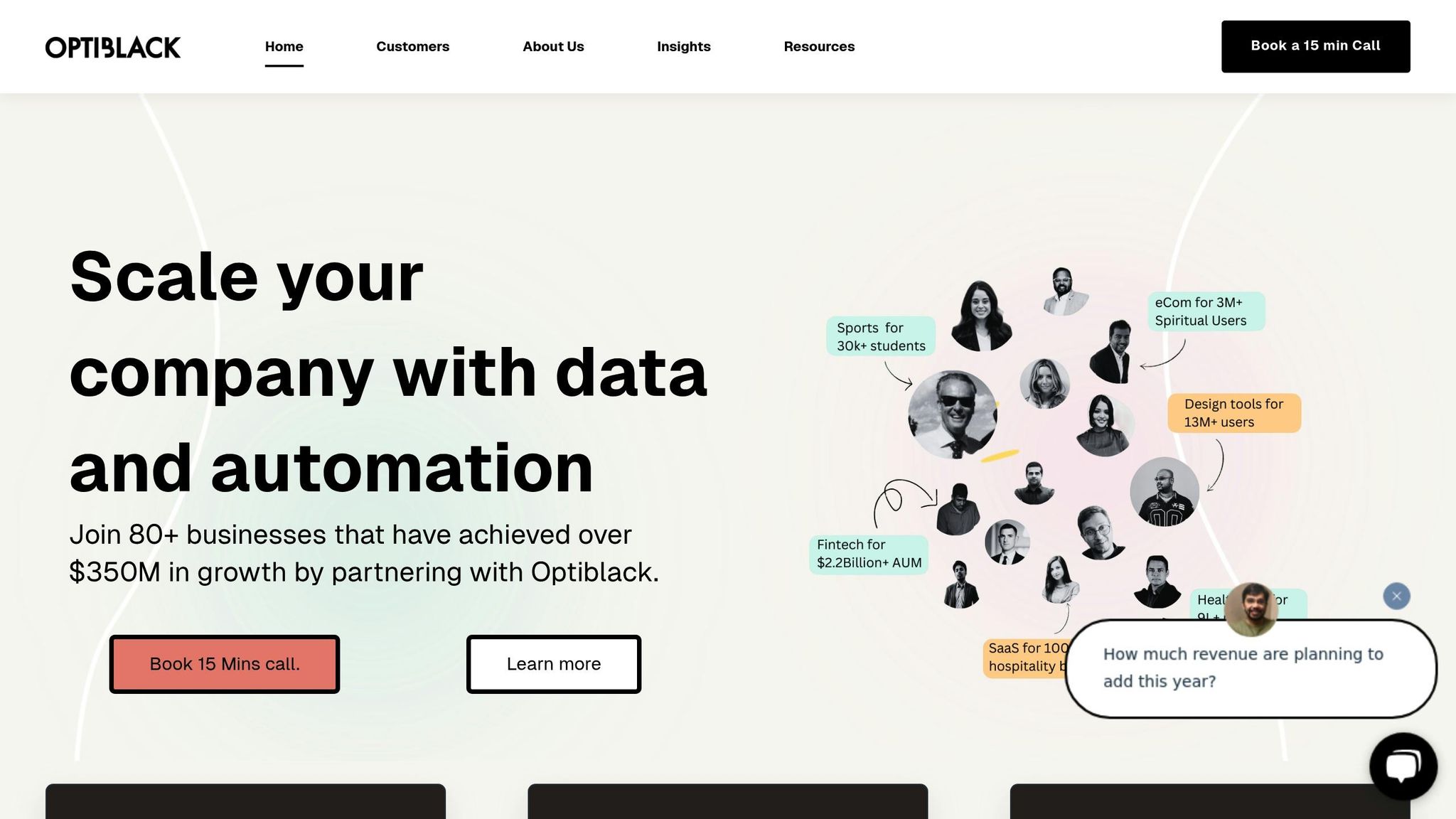

Optiblack specializes in creating scalable and efficient monitoring systems tailored for microservices. Their offerings are particularly suited for SaaS, eCommerce, Fintech, and Hospitality companies managing distributed architectures. With their expertise, Optiblack has supported over 80 businesses, contributing to more than $350 million in growth and helping manage 19 million users. These services complement the monitoring strategies previously discussed.

The Product Accelerator service is designed to address the skill gaps many companies face when implementing advanced monitoring solutions. By providing dedicated teams with specialized knowledge, this service enables companies to launch web applications three times faster and mobile applications twice as fast. For microservices, this speed translates into smoother integration of CI/CD observability tools and quicker deployment of automated monitoring solutions. The service also offers flexible staffing to scale monitoring capabilities as needed.

The process begins with an exploratory call, followed by finalizing project requirements, and onboarding a specialized team that works toward clearly defined milestones. The results speak for themselves. For instance:

"We needed a way to optimize our SaaS website. Since engaging with Optiblack we have seen a 102% increase in our MRR." - Joli Rosario, COO of TaxplanIQ

Similarly, Dictanote's CEO, Anil Shanbag, shared:

"We improved our trial rates by 20% in 1 week after working with Optiblack, leading to an increase in paid users."

Beyond immediate results, the service provides benchmarks and proven practices, eliminating the need for companies to develop solutions from scratch.

Building on the speed and efficiency of the Product Accelerator, Optiblack enhances monitoring through cutting-edge data infrastructure and AI-driven solutions. These services focus on improving observability and decision-making in microservices environments. Using machine learning and real-time data analysis, Optiblack's AI solutions optimize performance by analyzing user behavior, device performance, and network conditions. This enables proactive system maintenance rather than reactive troubleshooting.

Optiblack also offers a Data Accelerator service, which uses AI to refine application code and data handling, improving speed and memory efficiency.

As Vishal Rewari from Optiblack puts it:

"Our solutions use machine learning and data analysis to make your app faster, more responsive, and better for users."

To maximize the benefits of these services, Optiblack recommends setting clear KPIs, integrating AI monitoring tools, enabling real-time data collection, and configuring adaptive alerts.

Pipeline monitoring plays a critical role in deploying modern microservices. The complexity of distributed systems requires a combination of continuous monitoring, automation, and observability to ensure reliability and improve performance.

Advanced observability can cut downtime by 30% and reduce annual costs significantly, while also improving system performance by 40% and speeding up feature releases by 20%.

By integrating automation and observability into CI/CD workflows, teams can build a strong foundation for success. High-performing teams deploy 208 times more often and achieve lead times that are 106 times faster, largely because they identify issues early in development and maintain consistent monitoring configurations across all services.

To stay competitive, organizations must adopt the three pillars of observability and use centralized monitoring systems that deliver real-time insights into key performance indicators (KPIs). Standardizing logging formats, tracing protocols, and metric labels across teams is essential for maintaining data consistency and simplifying troubleshooting.

Organizations should focus on building resilient services by incorporating features like circuit breakers, retries, and automated recovery processes. As microservices evolve, monitoring strategies must keep pace. The future lies in AI-driven analysis, edge computing, and unified observability platforms that seamlessly integrate metrics, logs, and traces. Adopting these practices will prepare organizations for emerging technologies and help them maintain a competitive edge in today’s digital landscape.
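Circuit breakers and retries are often combined: retries with randomized (jittered) exponential backoff absorb brief glitches, while a breaker stops calling a dependency that keeps failing so the failure does not cascade. The sketch below is a minimal, single-threaded illustration with hypothetical class and parameter names; production systems usually rely on a resilience library or a service mesh for this.

```python
import random
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls fail fast."""


class CircuitBreaker:
    """Open after `failure_threshold` consecutive failures; after
    `reset_after_s`, let a trial call through (half-open)."""

    def __init__(self, failure_threshold=5, reset_after_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpenError("circuit open; failing fast")
            self.opened_at = None  # half-open: allow a trial call
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # any success closes the circuit fully
        return result


def retry_with_backoff(fn, attempts=3, base_delay_s=0.1, max_delay_s=2.0, sleep=time.sleep):
    """Retry fn with capped exponential backoff and random jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # don't hammer a dependency the breaker has already cut off
        except Exception:
            if attempt == attempts - 1:
                raise
            delay = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

A call such as `retry_with_backoff(lambda: breaker.call(charge_card, order))`, where `charge_card` is a hypothetical downstream call, retries transient errors but gives up immediately once the breaker has opened.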

Integrating automation and AI into microservices deployment pipelines brings a host of advantages. By automating repetitive tasks, these tools speed up release cycles and cut down on human errors, leading to smoother, more dependable deployments.

AI also steps in with real-time monitoring, quickly identifying and addressing issues to keep systems stable and reduce downtime. On top of that, automation streamlines complex workflows, lowers deployment risks, and boosts efficiency, making software delivery faster and more reliable.

Centralized logging and distributed tracing are essential for enhancing microservices observability, offering a clearer picture of how your system operates and performs.

With centralized logging, logs from all services are collected in one platform, making it simpler to search, correlate, and analyze events. This approach streamlines troubleshooting, strengthens security measures, and speeds up issue resolution.

Distributed tracing complements this by tracking requests as they move across multiple services. It reveals how different components interact, helping to pinpoint bottlenecks or failures. This makes diagnosing and addressing performance issues much faster.

When used together, these tools give you a holistic understanding of your system, improving reliability, operational efficiency, and transparency.

When choosing monitoring tools for a microservices architecture, there are several important aspects to consider. First, look for tools that provide distributed tracing - this feature allows you to follow requests as they move through various services, making it easier to identify and resolve problems.

Scalability is another key factor, as your system's complexity is likely to increase over time. Tools that can handle growing demands without compromising performance are a must. It's also important to select tools that deliver detailed data collection and analysis, enabling your team to gain deeper insights into system performance.

Advanced alerting capabilities with customizable thresholds can help your team address issues before they escalate. Finally, prioritize tools that integrate smoothly with your existing systems and are user-friendly. This ensures easier deployment and management, allowing your team to maintain reliability and respond to challenges effectively.
